Artificial Intelligence (AI) has emerged as a highly disruptive technology across many industries. As AI models continue to improve, more industries are sure to be affected. One industry that is already feeling the effects of AI is digital security. The technology has opened up new avenues for protecting data, but it has also raised concerns about its ethics and its effectiveness when compared with what we will refer to as traditional or established security practices.

This article will touch on the ways that this new tech is affecting already established practices, what new practices are arising, and whether or not they are safe and ethical.

How does AI affect already established security practices?

It is fair to say that AI is still a nascent technology. Most experts agree that it is far from reaching its full potential, yet it has already disrupted many industries and practices. For established security practices, AI gives operators the opportunity to analyze huge amounts of data at incredible speed and with impressive accuracy. Identifying patterns and detecting anomalies are tasks that AI is well suited to, and both are incredibly useful for most traditional data security practices.

Previously, these systems relied solely on human operators to perform the data analysis, which was time-consuming and prone to errors. Now, with AI’s help, human operators need only understand the refined data the AI provides and act on it.
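To make the idea of anomaly detection a little more concrete, here is a minimal sketch in Python. It is not a trained AI model. It uses a simple statistical rule (a z-score measured against a baseline of normal activity) as a stand-in for the kind of pattern a learned model would pick up. The function names, the sample numbers, and the threshold of 3.0 are all illustrative assumptions.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal activity as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Return True if value lies more than `threshold` standard deviations from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical data: failed logins per hour during a normal period.
history = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5, 7, 5]
baseline = build_baseline(history)

# New observations to score against that baseline.
new_counts = [6, 93, 5]
flagged = [count for count in new_counts if is_anomalous(count, baseline)]
print(flagged)
```

The operator only sees the flagged value rather than the full stream of counts, which is the division of labor described above: the system refines the data, and the human decides what to do about it.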

In what ways can AI be used to bolster and improve existing security measures?

AI can improve security measures in several other ways. In terms of access protection, AI-driven facial recognition and other forms of biometric security can provide a relatively foolproof access protection solution. Biometric access can also eliminate passwords, which are often a weak link in data security.

AI’s ability to sort through large amounts of data means that it can be very effective in detecting and preventing cyber threats. An AI-supported network security program could, with relatively little oversight, analyze network traffic, identify vulnerabilities, and proactively defend against any incoming attacks.
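As a rough illustration of what analyzing network traffic can mean in practice, the sketch below flags any source address that touches an unusually large number of distinct ports, which is a common sign of a port scan. This is a hand-written rule rather than a learned model, and the function name, the `(source_ip, dest_port)` record format, and the limit of 10 ports are assumptions made for the example.

```python
from collections import defaultdict


def find_port_scanners(connections, max_distinct_ports=10):
    """Return source IPs that contacted more than `max_distinct_ports` distinct ports."""
    ports_by_source = defaultdict(set)
    for source_ip, dest_port in connections:
        ports_by_source[source_ip].add(dest_port)
    return sorted(
        ip for ip, ports in ports_by_source.items()
        if len(ports) > max_distinct_ports
    )


# Hypothetical traffic log: two ordinary clients and one host probing ports 20 to 44.
connections = [("10.0.0.5", 443), ("10.0.0.5", 443), ("10.0.0.7", 22)]
connections += [("203.0.113.9", port) for port in range(20, 45)]

suspects = find_port_scanners(connections)
print(suspects)
```

An AI-supported system would aim to learn thresholds and patterns like this from the traffic itself instead of relying on a fixed number chosen by hand.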

The difficulties in updating existing security systems with AI solutions

The most pressing difficulty is that some old systems are simply not compatible with AI solutions. Security systems designed and built to be operated solely by humans often cannot be retrofitted with AI algorithms, which means that any upgrade necessitates a complete, and likely expensive, overhaul of the security systems.

One industry that has been quick to embrace AI-powered security systems is the online gambling industry. For those who are interested in seeing what AI-driven security can look like, visiting an online casino and investigating its security protocols will give you an idea of what is possible. Having an industry that was an early adopter of such a disruptive technology can help other industries learn what to do and what not to do. In many cases, online casinos staged entire overhauls of their security suites to incorporate AI solutions, rather than trying to combine new tech with older, incompatible security technology.

Another important factor in the difficulty of incorporating AI systems is that it takes a very large amount of data to properly train an AI algorithm. Thankfully, other companies are doing this work, and it should be possible to buy an already trained AI that is fit for purpose. All that remains is trusting that the trainers did their due diligence and that the AI will be effective.

Effectiveness of AI-driven security systems

AI-driven security systems are, for the most part, lauded as being effective. With faster threat detection and response times quicker than humanly possible, the advantage of using AI for data security is clear.

AI has also proven resilient in terms of adapting to new threats. AI has an inherent ability to learn, which means that as new threats are developed and new vulnerabilities emerge, a well-built AI will be able to learn and eventually respond to new threats just as effectively as old ones.

It has been suggested that AI systems will need to completely replace traditional data security solutions in the near future. The reason is not just their inherent effectiveness; there is also an anticipation that incoming threats will be using AI as well. Better to fight fire with fire.

Is using AI for security dangerous?

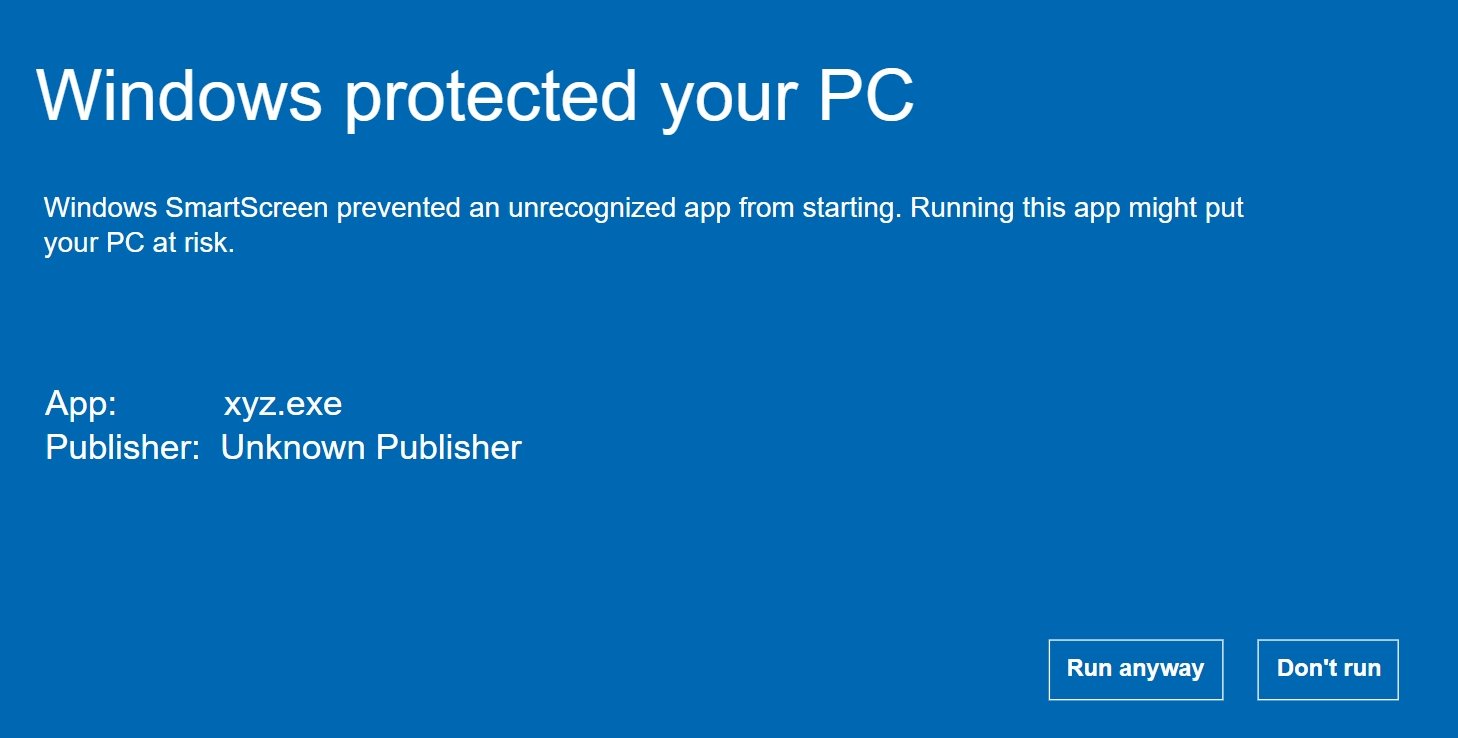

The short answer is no. The long answer is no, but with caveats. The main concern when using AI security measures with little human input is that they could generate false positives or false negatives. AI is not infallible, and despite being able to process huge amounts of data, it can still get confused.
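False positives and false negatives can be measured once a system's verdicts are compared against what actually happened. The sketch below computes both rates for a small, made-up set of alerts. The function name and the sample data are assumptions for illustration only.

```python
def error_rates(predictions, actuals):
    """Return (false_positive_rate, false_negative_rate).

    Both arguments are equal-length lists of booleans, where True means "threat".
    """
    false_positives = sum(p and not a for p, a in zip(predictions, actuals))
    false_negatives = sum(a and not p for p, a in zip(predictions, actuals))
    benign_total = sum(not a for a in actuals)
    threat_total = sum(bool(a) for a in actuals)
    fpr = false_positives / benign_total if benign_total else 0.0
    fnr = false_negatives / threat_total if threat_total else 0.0
    return fpr, fnr


# Hypothetical results for eight events: what really happened vs. what the system said.
actuals     = [True, True,  False, False, False, False, True, False]
predictions = [True, False, False, True,  False, False, True, False]

fpr, fnr = error_rates(predictions, actuals)
print(f"false positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```

In this example one of five benign events was wrongly flagged, and one of three real threats was missed. Which of the two errors is more costly depends on the system being protected.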

The AI security system could also itself be attacked and become a liability. If an attacker were to target the AI system and inject malicious code into it, its effectiveness could break down, potentially allowing multiple breaches.

The best remedy for both of these concerns is likely to ensure that there is still an alert human component to the security system. By ensuring that well-trained individuals are monitoring the AI systems, the dangers of false positives or attacks on the AI system are reduced greatly.
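One simple way to keep a human in the loop is to let the AI act alone only when it is very confident and to send everything uncertain to a person. The sketch below shows that routing under stated assumptions: the `triage` function, the action names, and the two thresholds are hypothetical, and real values would need to be tuned for each system.

```python
def triage(alert_score, block_above=0.95, review_above=0.50):
    """Route an alert based on the model's confidence score (0.0 to 1.0)."""
    if alert_score >= block_above:
        # High confidence: act immediately, but still tell a human.
        return "block_and_notify"
    if alert_score >= review_above:
        # Uncertain: a trained operator makes the call.
        return "human_review"
    # Low confidence: keep a record for later auditing.
    return "log_only"


for score in (0.99, 0.70, 0.10):
    print(score, triage(score))
```

Even the automatic path notifies an operator, so a misbehaving or compromised model has a better chance of being noticed.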

Are there legitimate ethical concerns when AI is used for security?

Yes. The main ethical concern relating to AI when used for security is that the algorithm could have an inherent bias. This can occur if the data used for the training of the AI is itself biased or incomplete in some way.
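One way to look for this kind of bias is to compare how often the system wrongly flags harmless activity for different groups of users. The sketch below does that for a small invented dataset. The record format `(group, flagged, was_threat)`, the group labels, and the function name are assumptions for the example.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: share of that group's benign events that were wrongly flagged}."""
    wrongly_flagged = defaultdict(int)
    benign_total = defaultdict(int)
    for group, flagged, was_threat in records:
        if not was_threat:
            benign_total[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / benign_total[group] for group in benign_total}


# Hypothetical audit log: every event here was harmless (was_threat=False).
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
]

rates = false_positive_rate_by_group(records)
print(rates)
```

Here group B is wrongly flagged twice as often as group A. A gap like that does not prove bias on its own, but it is a signal that the training data deserves a closer look.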

Another important ethical concern is that AI security systems are known to sort through personal data to do their job, and if this data were to be accessed or misused, privacy rights would be compromised.

Many AI systems also lack transparency and accountability, which compounds the problem of the AI algorithm’s potential for bias. If an AI reaches a conclusion whose reasoning a human operator cannot understand, the AI system must be held suspect.

Conclusion

AI could be a great boon to security systems and is likely an inevitable and necessary upgrade. The inability of human operators to combat AI threats alone seems to suggest its necessity. Coupled with its ability to analyze and sort through mountains of data and adapt to threats as they develop, AI has a bright future in the security industry.

However, AI-driven security systems must be overseen by trained human operators who understand the complexities and weaknesses that AI brings to their systems.

Working as a cyber security solutions architect, Alisa focuses on application and network security. Before joining us, she held cyber security researcher positions at a variety of cyber security start-ups. She also has experience in different industry domains, such as finance, healthcare, and consumer products.