Although most people would struggle to give a precise definition of “deepfake”, many of us have heard of this technology more than once and are aware of some of its malicious uses. As mentioned on previous occasions, its main use is the creation of fake pornographic material featuring the faces of celebrities, or of a former romantic partner of a user seeking revenge, although it has other real applications as well.

Beyond pornography, this technology is also used in politics to create false or intentionally misleading news. One example is a popular video clip in which Barack Obama appears to refer to former President Donald Trump as “a complete idiot.” It was soon shown that the video had been created from multiple images of Obama and a speech by actor and director Jordan Peele, proving that its use could have serious implications for users’ data protection.

Deepfake technology has even been used by the artistic community. One example was the exhibition marking the 115th anniversary of Salvador Dalí’s birth, in which the curators projected a prototype of the artist, driven by artificial intelligence, that could even interact with attendees.

This technology may seem very complex, but there are already attempts to make it available to any user. This time, data protection specialists from the International Institute of Cyber Security (IICS) will show you how to create a deepfake from a simple photo or video of the face you want to emulate.

This process relies on image animation: a neural network makes the image move according to a video stream previously selected by the user. Note that this article was written strictly for academic purposes, so the principle of data protection must prevail in any use of this knowledge. IICS is not responsible for any misuse of this information.

How does this process work?

Data protection experts mention that deepfakes are based on generative adversarial networks (GANs), machine learning algorithms that create new content from a specific, limited set of information, for example by analyzing thousands of photographs of a person to create new images that preserve that person’s physical traits.
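For reference, the adversarial setup behind a GAN is commonly written as the minimax objective below. The symbols are the standard textbook notation rather than anything defined in this article: $G$ is the generator, $D$ is the discriminator, $x$ is a real sample and $z$ is random noise fed to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to tell real photographs from generated ones, while the generator tries to produce images the discriminator cannot distinguish from real ones.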

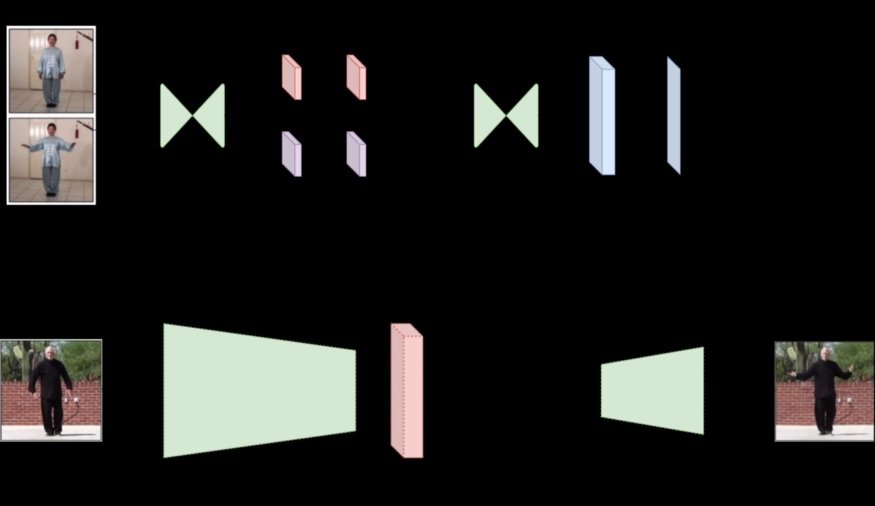

This article follows the model presented in First Order Motion Model for Image Animation, an approach intended to replace earlier methods, which depended on replacing objects in a video with other images.

Using this model, the neural network reconstructs a video in which the original subject is replaced by the object in the source image. At test time, the program tries to predict how the object in the source image will move based on the supplied video, so in practice it tracks even the smallest movements in the video, from turns of the head to the movement of the corners of the lips.
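The idea of transferring motion from the video onto the still image can be illustrated with a toy sketch. It assumes that each face is described by a few 2D key points and that each source key point simply receives the displacement observed in the video. The function name `transfer_keypoints` and the sample coordinates are hypothetical, and the published model learns considerably more than plain displacements, so this is an illustration of the principle, not the actual implementation.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def transfer_keypoints(source_kp: List[Point],
                       driving_kp_initial: List[Point],
                       driving_kp_current: List[Point]) -> List[Point]:
    """Move each source key point by the displacement of the matching
    key point in the driving video (current frame minus first frame)."""
    if not (len(source_kp) == len(driving_kp_initial) == len(driving_kp_current)):
        raise ValueError("all key point lists must have the same length")
    moved = []
    for (sx, sy), (ix, iy), (cx, cy) in zip(source_kp,
                                            driving_kp_initial,
                                            driving_kp_current):
        moved.append((sx + (cx - ix), sy + (cy - iy)))
    return moved


# Hypothetical key points (for example, a mouth corner and a chin point).
source = [(0.0, 0.0), (0.5, -0.25)]        # detected in the still photo
first_frame = [(0.25, 0.0), (0.5, 0.0)]    # detected in the first video frame
later_frame = [(0.5, 0.0), (0.5, 0.25)]    # detected in a later video frame

animated = transfer_keypoints(source, first_frame, later_frame)
print(animated)
```

Using displacements relative to the first frame, rather than absolute positions, keeps the proportions of the face in the photo instead of copying the geometry of the person in the video.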

Creating a deepfake video

It all starts with training on a large number of video samples, data protection experts mention. To reconstruct a video, the model extracts multiple frames, analyzes the motion patterns they contain, and learns to encode that motion as a combination of several variables.

At test time, the model reconstructs the video stream by adding the object from the source image to each frame of the video, which produces the animation.

The framework is implemented by using a motion estimation module and an image generation module.

The purpose of the motion estimation module is to understand exactly how the different frames in the video move, data protection experts mention. In other words, it tries to track each movement and encode it as the motion of key points. The result is a dense motion field, which works together with an occlusion mask that determines which parts of the output can be taken from the source image by warping and which must be filled in by the generator.

In the GIF file used as an example below, the woman’s back is not animated.
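How a dense motion field and an occlusion mask combine can be shown with a small sketch on a tiny grayscale grid. It assumes integer per-pixel offsets and nearest-pixel sampling, and it uses a constant `fill` value as a stand-in for the content a real generator would synthesize in occluded regions. The function `warp_with_occlusion` and all values are hypothetical and are not taken from the model's code.

```python
from typing import List, Tuple

Grid = List[List[float]]
Flow = List[List[Tuple[int, int]]]


def warp_with_occlusion(image: Grid, flow: Flow, mask: Grid,
                        fill: float = 0.0) -> Grid:
    """Backward-warp `image` with a per-pixel motion field, then blend
    with `fill` wherever the occlusion mask is below 1.

    flow[y][x] = (dy, dx) says where output pixel (y, x) samples from.
    mask[y][x] = 1.0 keeps the warped pixel, 0.0 discards it entirely.
    """
    height, width = len(image), len(image[0])
    out: Grid = []
    for y in range(height):
        row = []
        for x in range(width):
            dy, dx = flow[y][x]
            # Clamp the sampling position to the image borders.
            sy = min(max(y + dy, 0), height - 1)
            sx = min(max(x + dx, 0), width - 1)
            warped = image[sy][sx]
            m = mask[y][x]
            row.append(m * warped + (1.0 - m) * fill)
        out.append(row)
    return out


image = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0]]
# Every output pixel samples the pixel to its left: content shifts right.
flow = [[(0, -1)] * 3 for _ in range(2)]
# The top-right pixel is marked as occluded and cannot come from the source.
mask = [[1.0, 1.0, 0.0],
        [1.0, 1.0, 1.0]]

result = warp_with_occlusion(image, flow, mask, fill=9.0)
print(result)
```

In this toy version the masked pixel is simply overwritten with `fill`. In the real pipeline, those are the regions the image generation module has to produce on its own.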

Finally, the data produced by the motion estimation module is sent to the image generation module along with the source image and the selected video file. The image generator creates the moving video frames, with the object replaced by the one from the source image. The frames are then assembled into a new video.

How to create a deepfake

You can easily find the source code of a deepfake tool on GitHub, clone it on your own machine and run everything there; however, there is an easier way that allows you to get the finished video in just 5 minutes.

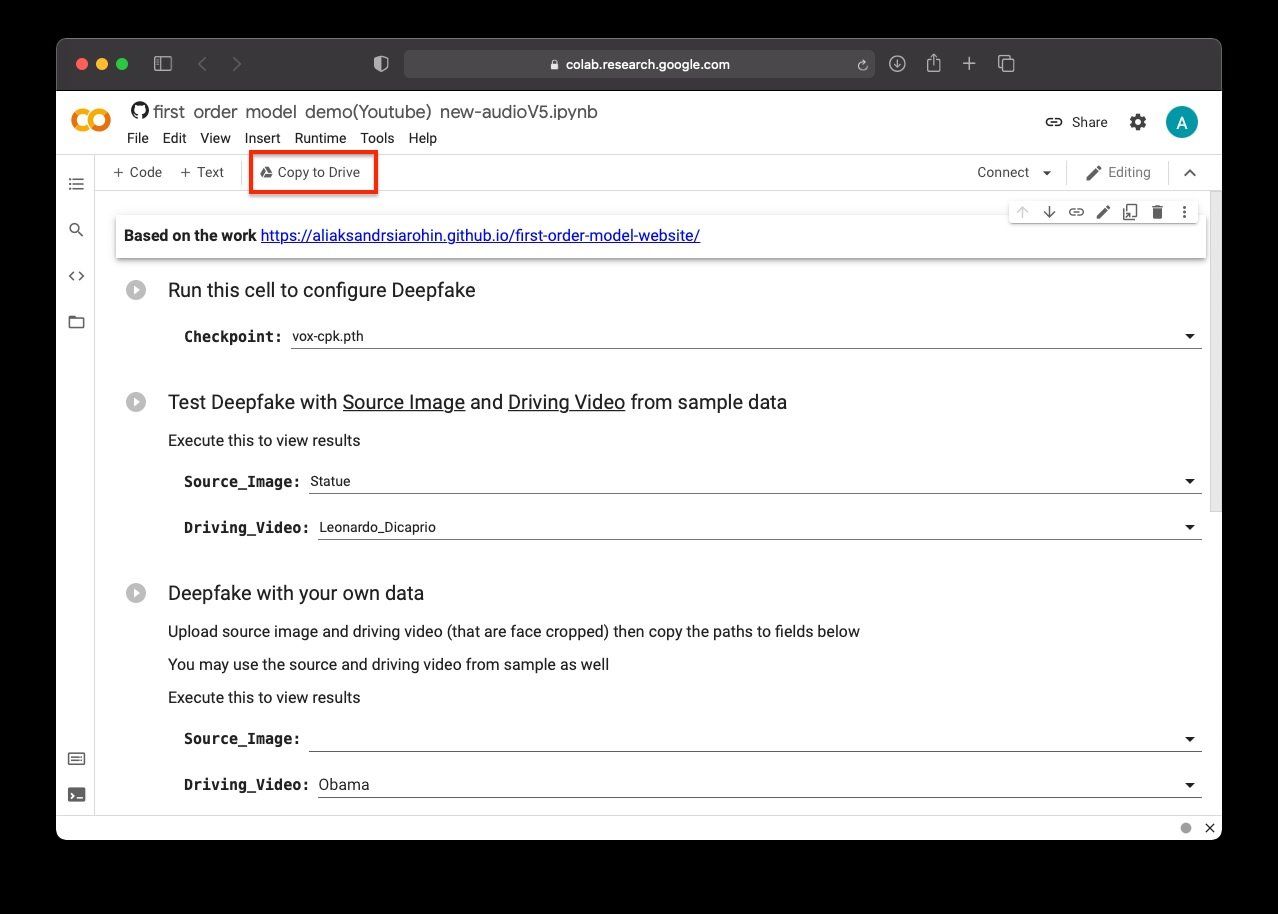

- Follow the next link:

- Then create a copy of the ipynb file on your Google Drive

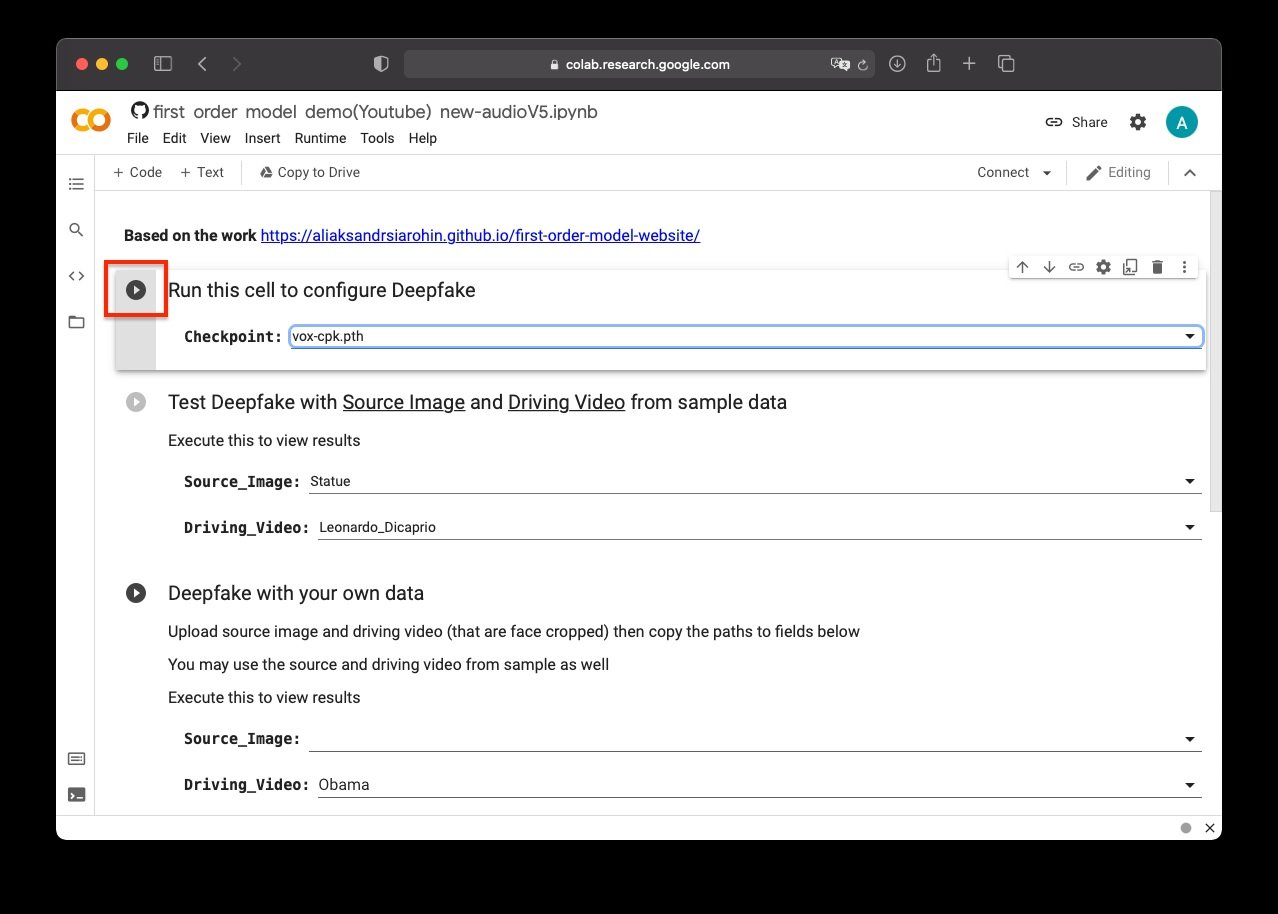

- Run the first process to download all the necessary resources and configure the model parameters

- You can now test the algorithm using a default set of photos and videos. Just select a source image and the video in which you want to project the image. According to data protection experts, after a couple of minutes the deepfake material will be ready

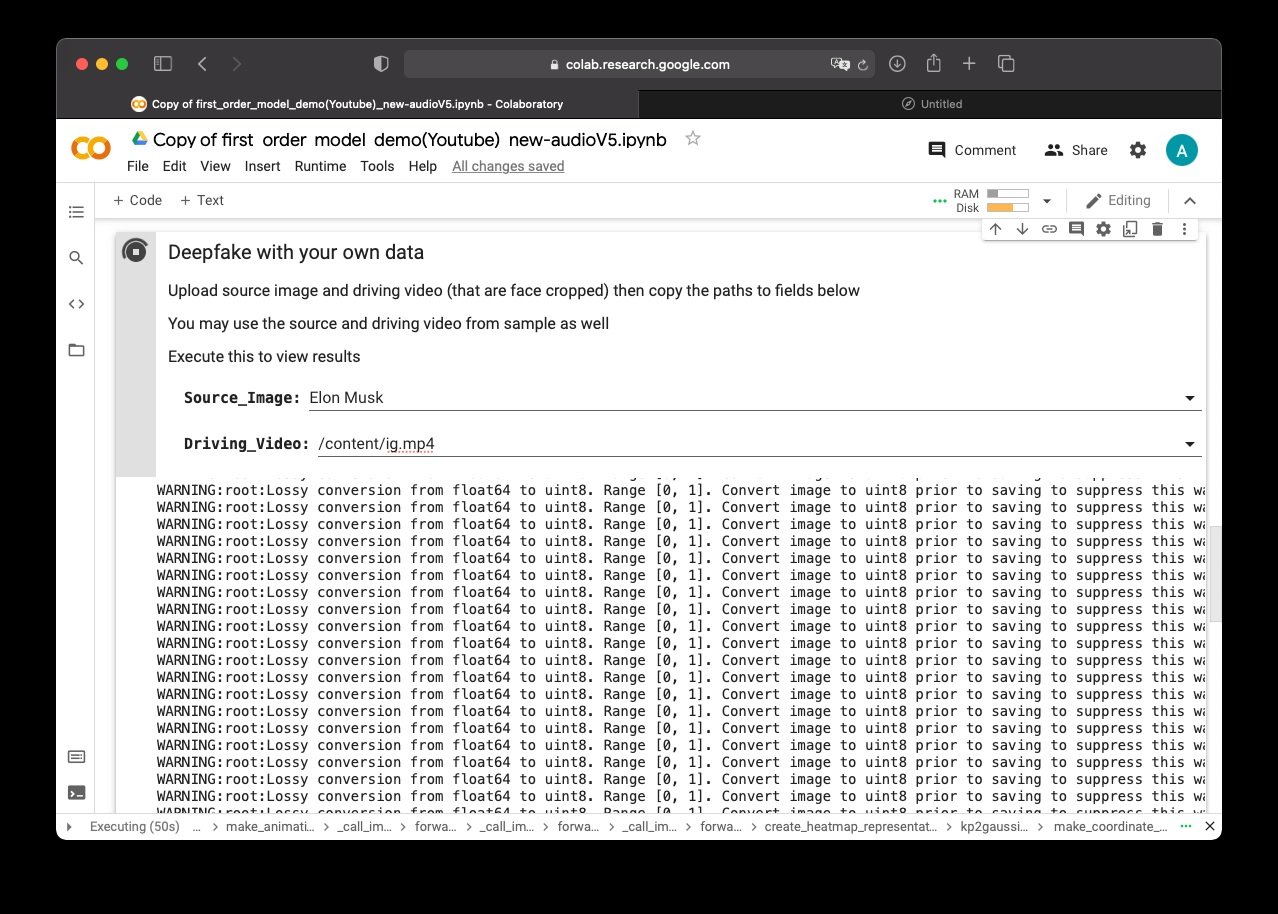

- To create your own video, specify the paths to the source image and the driving video in the third cell. You can upload them directly to the folder with the model, which can be opened by clicking the folder icon in the menu on the left. It is important that your video is in mp4 format
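Before running the third cell with your own files, it can help to check the paths first. The following is a minimal sketch using only the Python standard library. The helper names `has_mp4_extension` and `check_inputs`, and the file names in the usage note, are hypothetical and not part of the notebook.

```python
from pathlib import Path


def has_mp4_extension(video_path: str) -> bool:
    """Return True if the file name ends in .mp4 (case-insensitive)."""
    return Path(video_path).suffix.lower() == ".mp4"


def check_inputs(image_path: str, video_path: str) -> None:
    """Raise an error early if the video is not an mp4 file or if either
    file is missing, instead of failing later inside the model code."""
    if not has_mp4_extension(video_path):
        raise ValueError(f"video must be in mp4 format: {video_path}")
    for path in (image_path, video_path):
        if not Path(path).is_file():
            raise FileNotFoundError(f"file not found: {path}")
```

For example, calling `check_inputs("source.png", "driving.mp4")` in a notebook cell raises an error if either hypothetical file has not been uploaded yet, and returns silently otherwise.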

By combining a video of Ivangai with a photo of Elon Musk, we managed to get the following deepfake:

