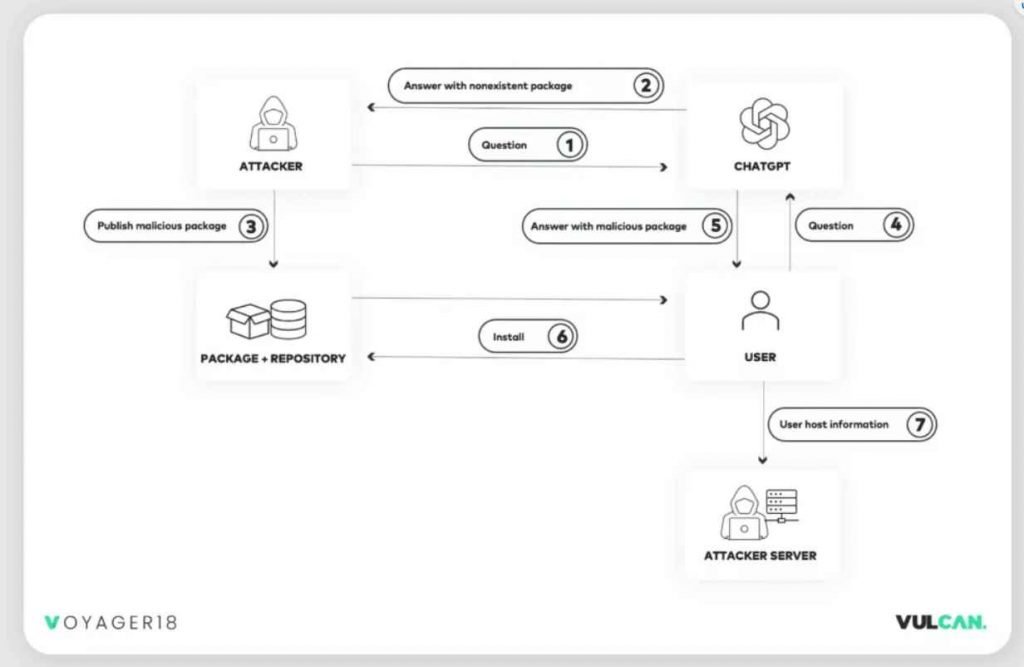

According to a recent study, attackers can use ChatGPT to help spread malicious packages into development environments.

In a blog post, researchers from Vulcan Cyber described a novel method for spreading malicious packages, which they dubbed “AI package hallucination.” The method stems from the fact that ChatGPT and other generative AI systems occasionally respond to user requests with fabricated sources, links, blogs, and data. Large language models (LLMs) like ChatGPT can generate “hallucinations”: fictitious URLs, references, and even entire code libraries and functions that do not exist. According to the researchers, ChatGPT will even produce dubious patches for CVEs and, in this particular case, offer links to code libraries that do not exist.

If ChatGPT recommends nonexistent code libraries (packages), attackers can exploit these hallucinations to distribute malicious packages without resorting to familiar tactics such as typosquatting or masquerading, according to the Vulcan Cyber researchers. “Those techniques are suspicious and already detectable,” the researchers wrote in their conclusion. However, if an attacker publishes a real package under the name of a ‘fake’ package that ChatGPT suggests, they may succeed in convincing a victim to download and install the malicious software.
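As a rough illustration of how a team might screen for this, the sketch below triages a package name suggested by an AI assistant against a locally kept snapshot of registry metadata. A name that is missing from the snapshot may be hallucinated, and a name that was registered only recently may have been published by someone exploiting the hallucination. The snapshot contents, the function name `triage_suggestion`, and the 90-day threshold are all assumptions made for this example; none of them come from the Vulcan Cyber research.

```python
from datetime import date, timedelta

# Hypothetical snapshot: package name -> date it first appeared on the registry.
# Names and dates are invented for illustration, not real registry data.
REGISTRY_SNAPSHOT = {
    "well-known-lib": date(2015, 3, 1),
    "brand-new-lib": date(2023, 6, 1),
}


def triage_suggestion(name, snapshot, today, min_age_days=90):
    """Classify a suggested package name as 'unknown', 'recent', or 'established'."""
    key = name.strip().lower()  # normalize case and stray whitespace
    first_seen = snapshot.get(key)
    if first_seen is None:
        # Not in the snapshot: possibly a hallucinated name.
        return "unknown"
    if today - first_seen < timedelta(days=min_age_days):
        # Exists, but too new to trust without manual review.
        return "recent"
    return "established"
```

Anything other than `"established"` would be sent for manual review rather than installed. Package age is only a heuristic, so a result of `"established"` does not by itself mean the package is safe.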

This attack approach demonstrates how simple it has become for threat actors to use ChatGPT as a tool for carrying out an attack. We should expect to keep seeing risks like this associated with generative AI, and we should be prepared for similar attack techniques to be used in the wild. Generative AI technology is still in its infancy, so this is only the beginning, and researchers are likely to uncover many new security issues in the months and years to come.

Companies should never download and run code that they don’t understand and haven’t evaluated. This includes code from open-source GitHub repositories and, now, ChatGPT suggestions. Teams should perform a security analysis of all code they intend to execute, and they should keep private copies of that code.
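One way to put the "private copies" advice into practice, sketched here under stated assumptions, is to record a SHA-256 digest for each package archive at the time it is reviewed and to refuse anything that does not match. The helper names `sha256_of` and `is_approved` and the in-memory `approved_hashes` mapping are hypothetical; a real team would more likely rely on a private package index or its package manager's own hash-pinning features.

```python
import hashlib
import hmac


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of an archive's bytes."""
    return hashlib.sha256(data).hexdigest()


def is_approved(filename: str, data: bytes, approved_hashes: dict) -> bool:
    """Accept an archive only if it matches the digest recorded at review time.

    approved_hashes maps archive filename -> hex SHA-256 digest (hypothetical
    format for this sketch).
    """
    expected = approved_hashes.get(filename)
    if expected is None:
        # Never reviewed: reject by default.
        return False
    # Constant-time comparison of the two hex digests.
    return hmac.compare_digest(expected, sha256_of(data))
```

With this check in place, an archive that was never reviewed, or whose contents changed after review, is rejected before it can be installed.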

In this instance, adversaries are using ChatGPT as a delivery mechanism. However, compromising a supply chain through shared or imported third-party libraries is not a new technique. The defense remains the same: apply secure coding practices, and thoroughly test and review any code intended for production use.

According to experts, “the ideal scenario is that security researchers and software publishers can also make use of generative AI to make software distribution more secure.” The industry is still in the early stages of using generative AI for both cyber attack and defense.

Information security specialist, currently working as risk infrastructure specialist & investigator.

15 years of experience in risk and control process, security audit support, business continuity design and support, workgroup management and information security standards.