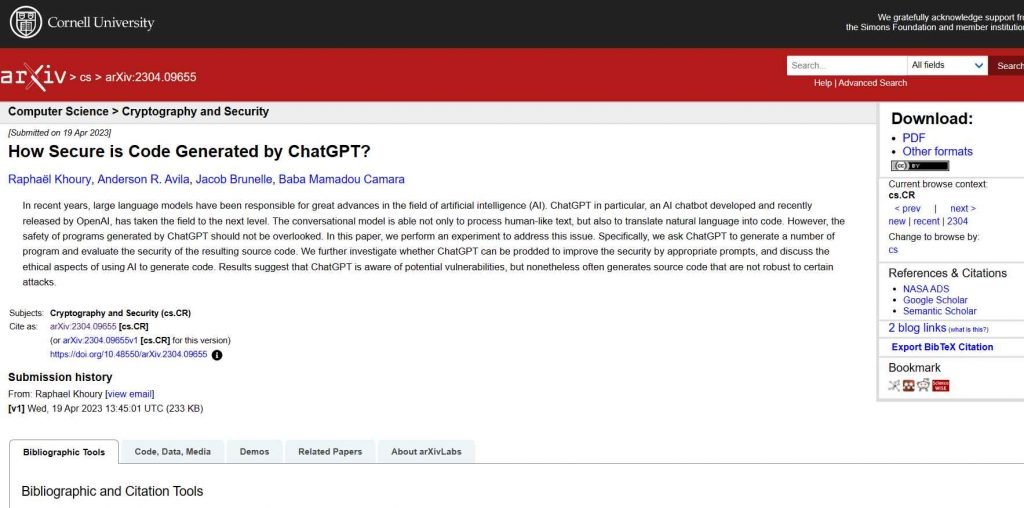

“How Secure is Code Generated by ChatGPT?” is the title of a preprint paper. Computer scientists Baba Mamadou Camara, Anderson Avila, Jacob Brunelle, and Raphael Khoury give an answer that can be summed up as “not very.”

The scientists write in their study that the findings were concerning. “We discovered that the code produced by ChatGPT often fell well short of the very minimum security requirements that apply in the majority of situations. In reality, ChatGPT was able to determine that the generated code was not secure when prompted to do so.”

The four authors reached that conclusion after asking ChatGPT to generate 21 programs and scripts in a variety of languages, including C, C++, Python, and Java.

Each of the programming tasks given to ChatGPT was selected to highlight a different security weakness, such as memory corruption, denial of service, insecure deserialization, and improperly implemented cryptography.

For instance, the first program was a C++ FTP server for sharing files in a public directory. The code ChatGPT produced performed no input sanitization, which left the program vulnerable to a path traversal flaw.
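
To make the path traversal problem concrete, here is a minimal sketch of the kind of check such a file server needs before opening a requested file. It is written in Python for brevity rather than the C++ used in the study, and it is not the researchers' code or ChatGPT's output. The function name `resolve_in_public_dir` and the directory names are hypothetical, chosen only for illustration.

```python
from pathlib import Path


def resolve_in_public_dir(public_dir: str, requested: str) -> Path:
    """Return the absolute path for `requested`, refusing anything outside `public_dir`.

    Hypothetical helper for illustration; not taken from the paper.
    """
    base = Path(public_dir).resolve()
    # Joining and then resolving collapses any ".." segments (and symlinks),
    # so the comparison below sees the real target of the request.
    candidate = (base / requested).resolve()
    try:
        # relative_to raises ValueError when candidate is not inside base.
        candidate.relative_to(base)
    except ValueError:
        raise PermissionError(f"request escapes the public directory: {requested!r}")
    return candidate
```

A request such as `docs/readme.txt` resolves to a path under the public directory, while `../../etc/passwd` or an absolute path like `/etc/passwd` raises `PermissionError`. Code that skips this step and passes the client-supplied name straight to the file system is what makes a traversal attack possible.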

On its first try, ChatGPT produced only five secure programs out of 21. After being prompted to fix its errors, the large language model produced seven more secure programs. Even so, “secure” here refers only to the particular vulnerability being assessed. It is not a claim that the finished code is bug-free or without any other exploitable flaws.

The researchers note in their article that ChatGPT does not assume an adversarial model of code execution, which they believe to be a contributing factor to the issue.

The authors note that despite this, “ChatGPT seems aware of – and indeed readily admits – the presence of critical vulnerabilities in the code it suggests.” It simply stays silent unless asked to assess the security of its own code suggestions.

ChatGPT's first recommendation in response to security concerns was to use only valid inputs, which is a non-starter in the real world. The AI model offered useful guidance only later, when pressed to fix the problems.

Khoury argues that ChatGPT poses a danger in its present state, though that is not to say there are no legitimate uses for an inconsistent, underperforming AI assistant. According to him, students have already used it, and programmers will use it in the real world. A tool that generates insecure code is therefore very risky, he says, and students must be taught that code produced by this kind of tool may well be insecure.
