Religious beliefs, political leanings, and medical conditions are up for grabs.

OAKLAND, Calif.—In the beginning, people hacked phones. In the decades to follow, hackers turned to computers, smartphones, Internet-connected security cameras, and other so-called Internet of things devices. The next frontier may be your brain, which is a lot easier to hack than most people think.

At the Enigma security conference here on Tuesday, University of Washington researcher Tamara Bonaci described an experiment that demonstrated how a simple video game could be used to covertly harvest neural responses to periodically displayed subliminal images. While her game, dubbed Flappy Whale, measured subjects’ reactions to relatively innocuous things, such as logos of fast food restaurants and cars, she said the same setup could be used to extract much more sensitive information, including a person’s religious beliefs, political leanings, medical conditions, and prejudices.

“Electrical signals produced by our body might contain sensitive information about us that we might not be willing to share with the world,” Bonaci told Ars immediately following her presentation. “On top of that, we may be giving that information away without even being aware of it.”

Flappy Whale had what Bonaci calls a BCI, short for “brain-connected interface” (the abbreviation is more commonly expanded as “brain-computer interface”). It came in the form of seven electrodes that connected to the player’s head and measured electroencephalography signals in real time. The logos were repeatedly displayed, but only for milliseconds at a time, a span so short that subjects weren’t consciously aware of them. By measuring the brain signals at the precise time the images were displayed, Bonaci’s team was able to glean clues about the player’s thoughts and feelings about the things that were depicted.
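The article doesn't describe how Bonaci's team processed the recordings, but the general idea of lining up a signal with the moments a stimulus appeared can be sketched in a few lines. The example below is a minimal illustration, not the researchers' method: it assumes a single channel stored as a list of samples, and the names `extract_epochs`, `average_response`, `pre`, and `post` are hypothetical. It cuts a short window around each display time, subtracts the pre-stimulus baseline, and averages the windows so that activity tied to the image stands out from unrelated background.

```python
from statistics import mean


def extract_epochs(signal, onsets, pre, post):
    """Cut a window of samples around each stimulus onset.

    signal: list of samples from one electrode (hypothetical layout)
    onsets: sample indices at which an image was displayed
    pre:    number of samples to keep before each onset
    post:   number of samples to keep from the onset onward
    """
    epochs = []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > len(signal):
            continue  # skip windows that run off the recording
        window = signal[start:stop]
        # Subtract the pre-stimulus average so windows are comparable.
        baseline = mean(window[:pre]) if pre else 0.0
        epochs.append([sample - baseline for sample in window])
    return epochs


def average_response(epochs):
    """Average the windows sample by sample."""
    if not epochs:
        raise ValueError("no complete epochs to average")
    return [mean(column) for column in zip(*epochs)]


# Synthetic recording: flat signal with a bump one sample after each onset.
recording = [0.0] * 50
recording[11] = 4.0
recording[31] = 2.0
epochs = extract_epochs(recording, onsets=[10, 30], pre=2, post=3)
print(average_response(epochs))
```

Comparing the averaged response for one category of image against another is where a preference or reaction could show up, which is why the timing of the display matters so much in this kind of setup.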

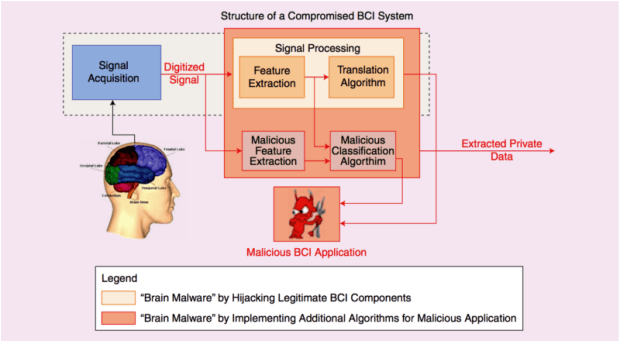

There’s no evidence that such brain hacking has ever been carried out in the real world. But the researcher said it wouldn’t be hard for the makers of virtual reality headgear, body-connected fitness apps, or other types of software and hardware to covertly mine a host of physiological responses. By repeatedly displaying an emotionally charged image for several milliseconds at a time, such products could pilfer data that reveals all kinds of insights about a person’s most intimate beliefs. Bonaci has also theorized that sensitive electric signals could be obtained by modifying legitimate BCI equipment, such as that used by doctors.

Bonaci said that electrical signals produced by the brain are so sensitive that they should be classified as personally identifiable information and subject to the same protections as names, addresses, ages, and other types of PII. She also suggested that researchers and game developers who want to measure the responses for legitimate reasons should develop measures to limit what’s collected instead of harvesting raw data. She said researchers and developers should be aware of the potential for “spillage” of potentially sensitive data inside responses that might appear to contain only mundane or innocuous information.

“What else is hidden in an electrical signal that’s being used for a specific purpose?” she asked the audience, which was largely made up of security engineers and technologists. “In most cases when we measure, we don’t need the whole signal.”
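Bonaci's suggestion to collect less than the whole signal can be illustrated with a small sketch. This is an assumption-laden example rather than anything presented at the talk: it imagines an application that only needs to know whether the signal in a short window crossed an activity level, and the function name `minimized_reading` and the `threshold` parameter are hypothetical. The raw samples are reduced to a single yes-or-no value on the device, and only that value is returned.

```python
def minimized_reading(samples, threshold):
    """Reduce a raw window to the one value the application needs.

    samples:   raw readings for one short window (hypothetical format)
    threshold: activity level the application cares about (assumed)
    """
    if not samples:
        raise ValueError("empty window")
    # Mean absolute amplitude of the window.
    mean_abs = sum(abs(s) for s in samples) / len(samples)
    # Only the derived flag leaves this function; the raw samples do not.
    return {"active": mean_abs >= threshold}


print(minimized_reading([1.0, -3.0, 2.0], threshold=2.0))
```

Whether a derived value like this is truly free of “spillage” depends on the feature chosen, so a reduction step of this kind would still need review for what it might reveal.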

Source: https://arstechnica.com

Working as a cyber security solutions architect, Alisa focuses on application and network security. Before joining us, she held cyber security researcher positions at a variety of cyber security start-ups. She also has experience in different industry domains such as finance, healthcare, and consumer products.